In the previous post we examined the trade-offs between the functionality, accuracy, and design of a neural input wristband, and described a system-engineering approach spanning hardware, software, and humanware. In this post we dive into the system specification guidelines needed to create an optimal neural input wristband.

Successfully bringing a high-tech neural interface to the market involves carefully balancing three important parts: advanced sensors, smart processing systems, and user-friendly designs. Getting this balance right is very important, and it means making sure all these parts work well together.

The sensors' job is to gather useful information, which the system then uses to give us insights. The product combines the sensor and system to make things easy for the user. Making sure each part meets its requirements and works smoothly with the others is crucial.

Figuring out the sensor specs is relatively simple and well understood. The sensor is connected to an electrode that touches the skin surface, and it needs to give us clear signals and a wide dynamic range. The clearer the signals, the better we understand the user's intent. Sensor-specification parameters include low input-referred noise, wide dynamic range, high input impedance, high common-mode rejection ratio (CMRR), and high power-supply rejection ratio (PSRR).

Since we already understand sensor specs well, this post will focus on the system specifications. The system has to handle lots of data in real time with limited computing power, and the product needs to be comfortable and durable across different people and situations.

System specification parameters include:

Reach

Accuracy

Latency

Computing power (measured in MAC operations).

Neglecting any of these components can lead to a suboptimal product and user experience.

The relevant discussion begins at 20:20

Reach

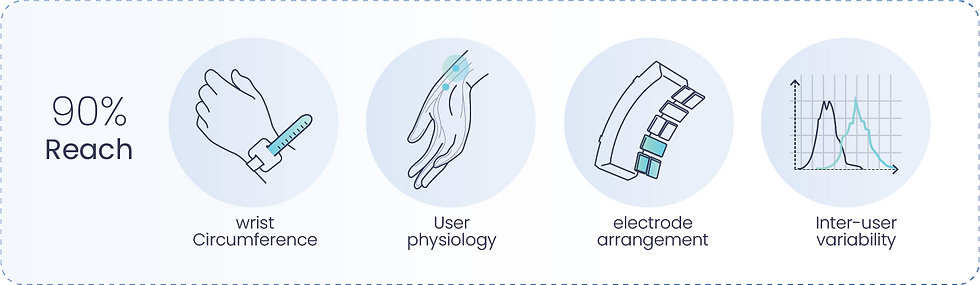

For a neural interface to be successful, it needs to work well for a large portion of users, at least 90% of them. This is called "reach." Reach is influenced by things like wrist size, user physiology, and how the electrodes are set up. The signals our bodies produce are unique and variable, which makes it challenging to get accurate results.

Here are some important factors that affect reach:

Wrist Size: People have different wrist sizes, so the signals we pick up can be different for each person, even if they do the same action.

User Physiology: Each person's body is a bit different, especially the nerves in their wrist. This affects the signals we get when they do something. We need smart algorithms to handle this.

Electrode Arrangement: The way the electrodes are placed is important. Since people's wrists are different, the signals we get can be really different too.

Variability Between Users: Because everyone's body is unique, sometimes we might get signals that look the opposite of what we expect.

Actual finger movement (black line) vs. system prediction (yellow line)

The most common challenge for high reach is an electrode detaching from the skin surface of the wrist. In that instance, a “missing” signal pattern is generated. To overcome this challenge, we need to focus on the design of the band and its curvature, the design of the electrodes, and, for some extreme use cases, introduce a settings option to adjust the signal intensity.

We may also introduce a calibration procedure in which the user provides a small sample set of each relevant gesture. Using this data, a classifier algorithm “tunes” the neural network weights to the specific user's physiology to overcome such challenges.
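To make the calibration idea concrete, here is a minimal sketch in plain Python. It assumes the part being tuned is a single softmax output layer and that tuning is plain gradient descent on a handful of labeled user samples; the real procedure may tune more of the network or use a different optimizer. All names, the two-feature inputs, and the starting weights are hypothetical.

```python
import math

def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def predict_proba(weights, bias, x):
    # weights[c] is the weight vector for gesture class c
    scores = [sum(w * xi for w, xi in zip(weights[c], x)) + bias[c]
              for c in range(len(weights))]
    return softmax(scores)

def mean_loss(weights, bias, samples):
    # average cross-entropy over (features, label) pairs
    return -sum(math.log(predict_proba(weights, bias, x)[y])
                for x, y in samples) / len(samples)

def calibrate(weights, bias, samples, lr=0.1, steps=100):
    """Fine-tune copies of the output-layer weights on a small user sample set."""
    weights = [row[:] for row in weights]
    bias = bias[:]
    for _ in range(steps):
        for x, y in samples:
            p = predict_proba(weights, bias, x)
            for c in range(len(weights)):
                err = p[c] - (1.0 if c == y else 0.0)  # cross-entropy gradient
                bias[c] -= lr * err
                for i, xi in enumerate(x):
                    weights[c][i] -= lr * err * xi
    return weights, bias

# Hypothetical starting weights that respond "the wrong way round" for this user.
pretrained_w = [[0.0, 0.5], [0.5, 0.0]]
pretrained_b = [0.0, 0.0]
# One hypothetical calibration sample per gesture: (features, gesture index).
user_samples = [([1.0, 0.0], 0), ([0.0, 1.0], 1)]

loss_before = mean_loss(pretrained_w, pretrained_b, user_samples)
tuned_w, tuned_b = calibrate(pretrained_w, pretrained_b, user_samples)
loss_after = mean_loss(tuned_w, tuned_b, user_samples)
```

After calibration the loss on the user's own samples drops and both gestures are classified correctly. A production system would also need to guard against overfitting to such a small sample set.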

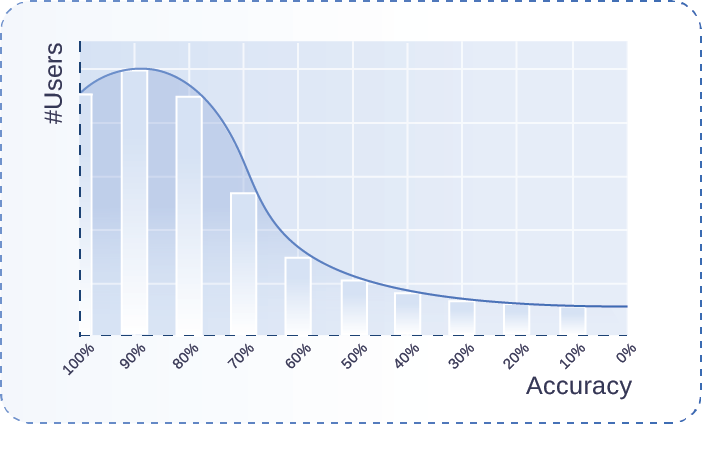

Accuracy will vary across different users (illustration)
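One way to make the 90% target measurable is to treat reach as the share of test users whose individual accuracy clears a threshold. The sketch below assumes that definition and borrows the 96% accuracy figure discussed later in this post as the threshold. The function name and the per-user accuracies are illustrative, not measured data.

```python
def reach(per_user_accuracy, min_accuracy=0.96):
    """Fraction of users whose accuracy is at least min_accuracy."""
    if not per_user_accuracy:
        raise ValueError("need at least one user")
    served = sum(1 for acc in per_user_accuracy if acc >= min_accuracy)
    return served / len(per_user_accuracy)

# Hypothetical per-user accuracies for ten test users.
accuracies = [0.99, 0.98, 0.97, 0.97, 0.96, 0.98, 0.99, 0.97, 0.91, 0.98]

user_reach = reach(accuracies)      # nine of ten users clear the threshold
meets_target = user_reach >= 0.90   # compare against the 90% reach target
```

Under this definition a single poorly served user out of ten still meets the target, while two would not.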

Accuracy

Accuracy in a neural interface refers to its ability to correctly recognize and interpret user inputs, particularly finger movements and the signal patterns that represent gestures. To ensure high accuracy, algorithms must account for both intra-class and inter-class variability. Given the recent hype around artificial intelligence, it’s easy to overuse the term. But for signal pattern matching, deep learning AI, which some companies have been developing for almost a decade, has proven effective. Accuracy typically trails off as the user set grows.

For an interface to feel reliable, the accuracy should be at least 96.0%.

A high occurrence of random or constant false positives exceeding an acceptable error rate erodes user trust in the interface. This lack of confidence leads to decreased usage and adoption. Achieving and maintaining high accuracy is vital for user satisfaction and successful implementation of neural interfaces.

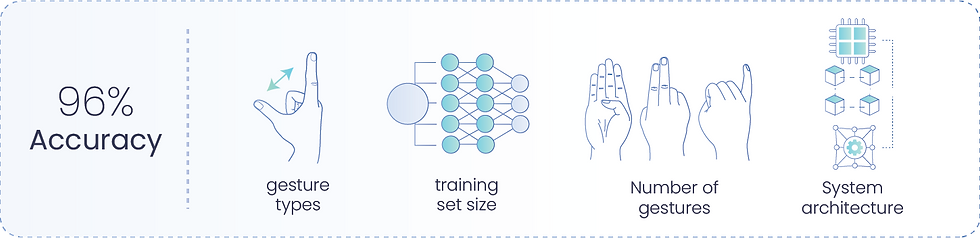

The factors that affect accuracy are the gesture types, the training set size, the number of gestures, and the system architecture.

Gesture types: the human hand has an extremely complex anatomy. It consists of 27 bones, 27 joints, 34 muscles, over 100 ligaments and tendons, numerous blood vessels, nerves, and soft tissue. It is not difficult to imagine that an index finger movement may generate a signal pattern similar to that of a soft tap of the index finger on the thumb.

Training set size: the more you train a neural network the more accurate the classifier will be. So the more patterns you introduce to the algorithm, the more you increase the likelihood of a correct classification. Once again, this is where thoughtfully implemented deep learning AI is critical.

The number of gestures: the more gestures you have to classify, the more data the classifier will require. Moreover, the data sets will need more variety, as explained above under variability between users.

System architecture: the algorithmic approach and the neural networks you design, build, and train will have an extraordinary effect on the accuracy and generalization ability of the product. Let’s belabor the point, for it cannot be overstated: the rapid adoption of artificial intelligence will simplify this task immensely and remove previous technological hurdles.

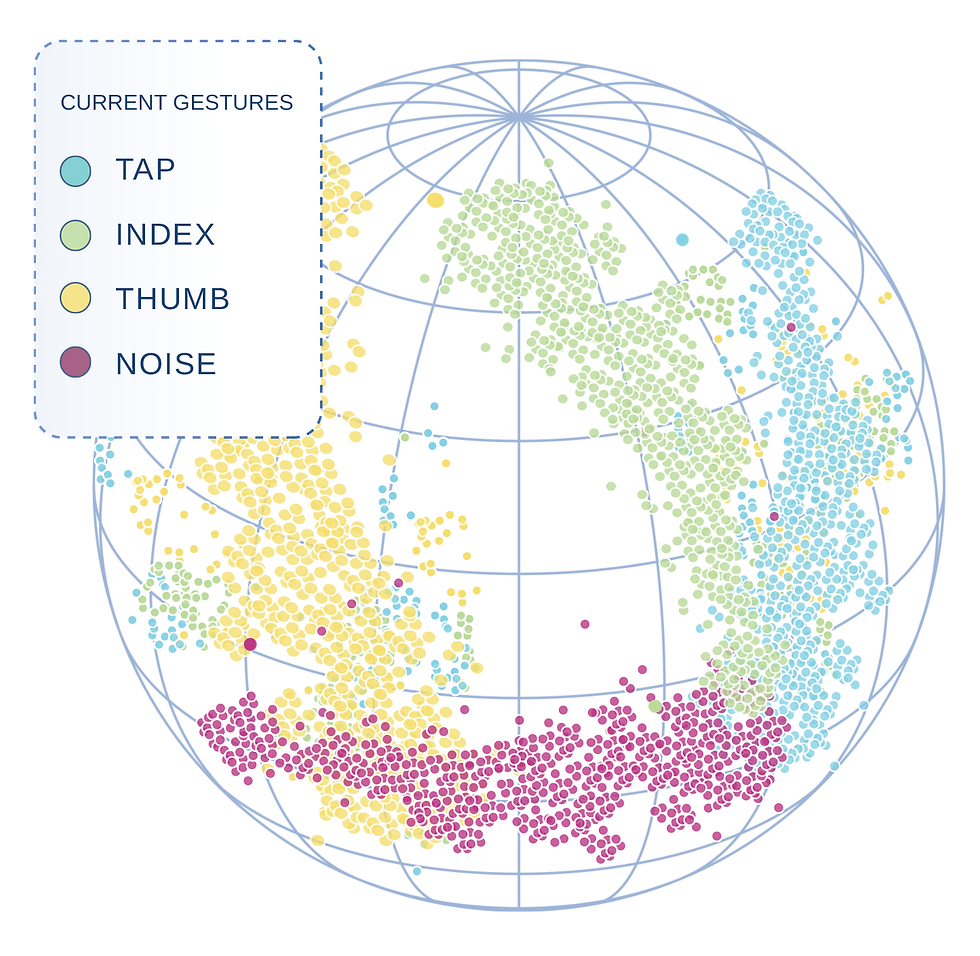

Accuracy is manifested by classifying a true positive. Noise or false positives can occur anywhere in the feature space and present a challenge for accurate classification. The objective is to develop an AI mapping algorithm that effectively transforms the sensor space into a lower-dimensional feature space, ensuring adequate separability between gestures.

Unsurprisingly, users expect a cross-user accuracy rate of over 96% for the gesture set. Additionally, they expect a very low false positive rate, averaging fewer than 0.5 false positives per hour (that is, less than one every two hours).
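These two targets are easy to check against test data. The sketch below is one possible way to do it, assuming accuracy is measured as the share of correctly classified gestures and false positives are counted over a period in which the user performs no gestures. The function names, gesture labels, and counts are hypothetical.

```python
def gesture_accuracy(true_labels, predicted_labels):
    """Share of gestures whose predicted label matches the true label."""
    if len(true_labels) != len(predicted_labels) or not true_labels:
        raise ValueError("label lists must be non-empty and of equal length")
    correct = sum(1 for t, p in zip(true_labels, predicted_labels) if t == p)
    return correct / len(true_labels)

def false_positives_per_hour(false_positive_count, hours_observed):
    """Average number of spurious detections per hour of observation."""
    if hours_observed <= 0:
        raise ValueError("hours_observed must be positive")
    return false_positive_count / hours_observed

def meets_accuracy_spec(accuracy, fp_per_hour,
                        min_accuracy=0.96, max_fp_per_hour=0.5):
    # Thresholds follow the targets quoted in the text.
    return accuracy > min_accuracy and fp_per_hour < max_fp_per_hour

# Hypothetical test session: 100 gestures, 2 taps misread as pinches.
true_labels = ["tap"] * 60 + ["pinch"] * 40
predicted_labels = ["tap"] * 58 + ["pinch"] * 42

accuracy = gesture_accuracy(true_labels, predicted_labels)   # 98 of 100 correct
fp_rate = false_positives_per_hour(false_positive_count=3, hours_observed=8)
spec_ok = meets_accuracy_spec(accuracy, fp_rate)
```

Keeping the two checks separate matters: a classifier can be highly accurate on performed gestures and still fire too often when the hand is at rest.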

The gesture set should be natural and intuitive for users to perform. While some gestures, like the so-called “bloom” gesture, may be easy to classify, they are not natural or intuitive. Achieving high accuracy requires addressing factors like intra-class variability, inter-class variability, false positives, and motion artifacts.

User-gesture variability mapping (illustration)

Latency

Latency refers to the delay or elapsed time between a user input gesture and the execution of a digital command. It represents the time it takes for the device to respond to the input. High latency results in a noticeable delay, causing a lag between the user's input and the interface's response.

To ensure a smooth and responsive user experience, it is desirable to have low latency. Generally, an acceptable latency range is around 40 to 60 milliseconds, with a sweet spot of 50 milliseconds. For touch-based interfaces, a typical acceptable latency is 50 milliseconds. Keeping latency within the acceptable range maintains a seamless user-interface interaction. Latency is affected by data packet size, the gesture types, the communication module, and the algorithm runtime.

Latency in a neural interface includes both algorithm-induced latency and the time taken to transmit a data packet from the interface to a digital device. Certain gestures are more sensitive to high latency, such as drag-and-drop input, which is a continuous gesture that requires longer processing time compared to a simple tap gesture, which is a discrete gesture.

Latency for a continuous interaction

The size of data packets is determined by the number of sensors and their sampling rate, while the transmission time is influenced by the communication module and its bandwidth.
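As a rough illustration of how these pieces add up, the sketch below estimates packet size from the number of sensors and their sampling rate, derives a transmission time from the link bandwidth, and adds an algorithm runtime to get a total latency. It ignores protocol overhead, buffering, and retransmissions, and every number in it (4 sensors, 1 kHz sampling, 16-bit samples, 10 ms packets, a 1 Mbit/s link, 30 ms of algorithm time) is an assumption chosen for the example.

```python
def packet_bits(num_sensors, sampling_rate_hz, packet_interval_ms, bits_per_sample):
    """Payload size of one packet, in bits."""
    samples_per_sensor = sampling_rate_hz * packet_interval_ms / 1000
    return num_sensors * samples_per_sensor * bits_per_sample

def transmission_ms(bits, bandwidth_bits_per_s):
    """Time to push the payload over the link, in milliseconds."""
    return bits / bandwidth_bits_per_s * 1000

def total_latency_ms(algorithm_ms, bits, bandwidth_bits_per_s):
    """Algorithm-induced latency plus transmission time."""
    return algorithm_ms + transmission_ms(bits, bandwidth_bits_per_s)

# Hypothetical configuration, not the specification of any real device.
bits = packet_bits(num_sensors=4, sampling_rate_hz=1000,
                   packet_interval_ms=10, bits_per_sample=16)
latency = total_latency_ms(algorithm_ms=30.0, bits=bits,
                           bandwidth_bits_per_s=1_000_000)
within_budget = latency <= 50.0   # 50 ms target from the text
```

With these made-up numbers the transmission time is well under a millisecond, so the algorithm runtime dominates the budget. A slower link or a larger sensor count shifts that balance.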

Designing an interface with low latency is essential. Given the constraints of computation and memory-bandwidth in low-power computing devices, careful system design is necessary to meet user expectations. This involves considering both discrete and continuous gestures. The latency for an input device should ideally be below 50 milliseconds to ensure high responsiveness, precision, and intuitive interaction.

MAC

MAC, or Multiply and Accumulate operations, is a numerical metric that quantifies the total computational load and memory requirements of an algorithm. It measures the amount of processing power needed by the compute unit. Performing most computations on the interface devices themselves is important to minimize response time and conserve bandwidth.

The MAC count is mostly affected by the number of gestures, algorithm architecture, gesture types, and the training set size.

Wearable devices are built around a system on a chip (SoC), which limits how much computation they can perform per unit of time. The number of MAC operations required depends on the rate at which sensor data arrives and on how the processing pipeline is structured, so it can vary slightly or substantially with the sensor and its sampling rate. We often use a 60-millisecond window as a reference.

To calculate MAC, weighted computations involving input samples and weights derived from supervised learning optimization methods are performed. It encompasses the extraction of data from memory and the mathematical computations carried out on the input. Neural networks and computational graphs pose challenges in terms of compute time and memory usage. These resources are constrained in low-power wearables, where edge devices handle local computing and processing of data packets instead of relying on remote servers.
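For common layer types, the MAC count can be estimated directly from the layer shapes. The sketch below uses the standard counting rules for fully connected, 1-D convolutional, and LSTM layers, ignoring biases and activation functions. The layer sizes are hypothetical and are not the architectures shown in the figure.

```python
def dense_macs(in_features, out_features):
    # One multiply-accumulate per weight.
    return in_features * out_features

def conv1d_macs(in_channels, out_channels, kernel_size, out_length):
    # Each output sample of each output channel is a weighted sum over
    # in_channels * kernel_size inputs.
    return out_length * out_channels * in_channels * kernel_size

def lstm_macs(input_size, hidden_size, timesteps):
    # Four gates, each with input weights and recurrent weights, per timestep.
    return timesteps * 4 * hidden_size * (input_size + hidden_size)

# Hypothetical network: two convolutional layers and one fully connected layer.
layers = [
    conv1d_macs(in_channels=3, out_channels=4, kernel_size=5, out_length=20),
    conv1d_macs(in_channels=4, out_channels=4, kernel_size=3, out_length=9),
    dense_macs(in_features=36, out_features=5),
]
total_macs = sum(layers)
```

Counting layer by layer like this makes it easy to see which part of a network dominates the budget before anything is trained.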

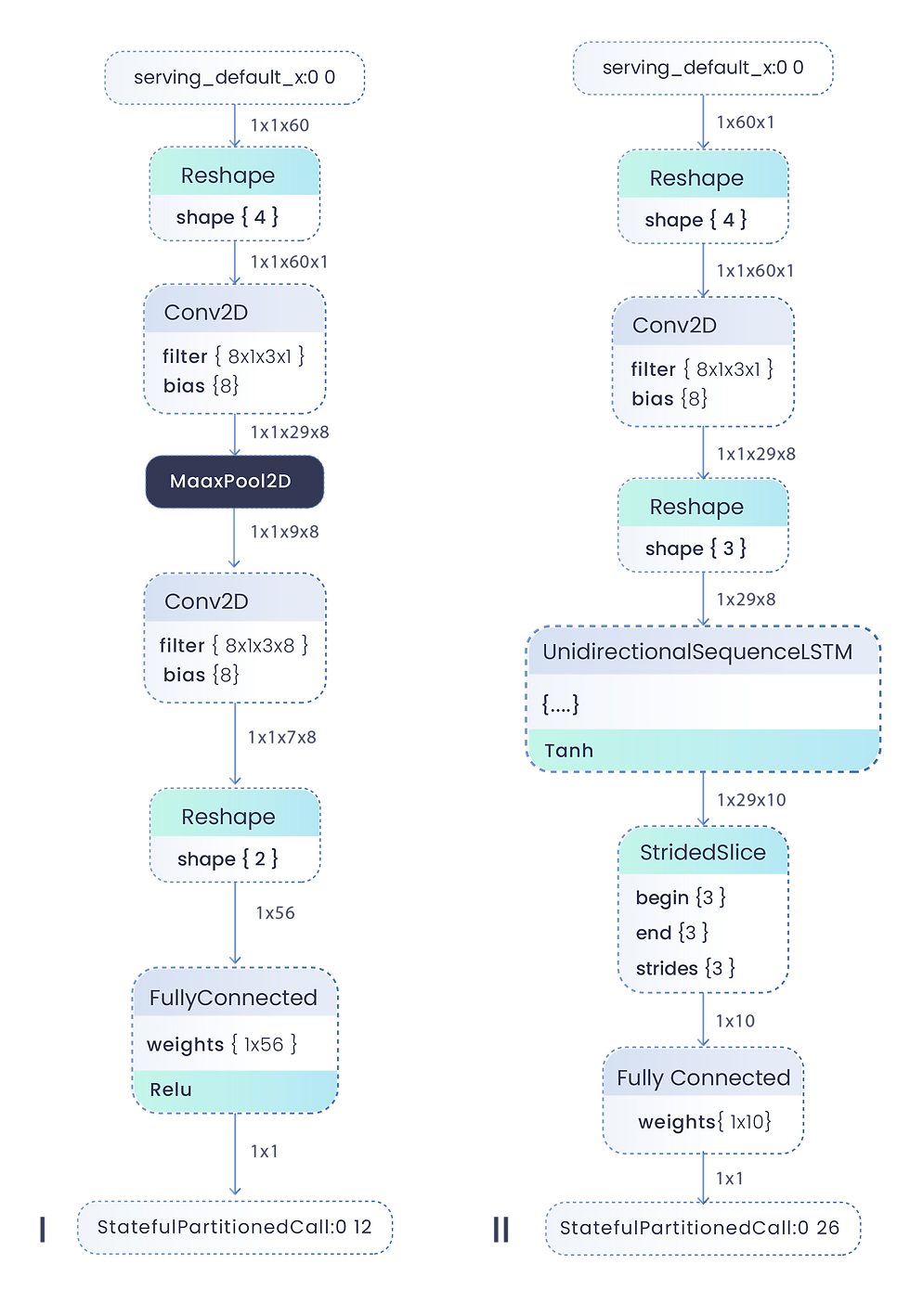

This figure illustrates two possible architectures for neural networks, with different layers and operations. The first architecture (left) consists of 2 convolutional layers and 1 fully connected layer, totaling 2,348 Multiply and Accumulate (MAC) operations per 60 milliseconds. The second architecture includes convolution, Long Short-Term Memory (LSTM), and a fully connected layer, amounting to 822 MAC operations per 60 milliseconds of sensor data.
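To compare these figures against a processor's throughput, it helps to convert them to MAC operations per second. The short sketch below does that arithmetic for the two totals quoted above, assuming one inference per 60-millisecond window with no overlap between windows. The function and variable names are illustrative.

```python
WINDOW_MS = 60  # reference window from the text

def macs_per_second(macs_per_window, window_ms=WINDOW_MS):
    """Sustained MAC rate if one inference runs per window."""
    return macs_per_window * 1000 / window_ms

conv_dense_rate = macs_per_second(2348)  # first architecture, about 39,133 MAC/s
conv_lstm_rate = macs_per_second(822)    # second architecture, 13,700 MAC/s
ratio = 2348 / 822                       # first needs about 2.86x the MACs
```

If windows overlap, for example when the classifier runs more often than once per 60 milliseconds, the sustained rate scales up accordingly.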

These tables summarize the number of parameters, MAC operations, and CPU usage for modern computer vision-based classifiers. The tables demonstrate that neural networks designed for computer vision tasks are significantly more computationally demanding than sensor-based AI systems. It therefore seems inevitable that neuro-gestural recognition will be used for more than simple, natural pointing functionality.

MAC operations serve as key metrics for resource-constrained classifiers and regressors, particularly in fixed-point signal processing. Given the limitations of CPU usage and memory bandwidth in low-power wearables, this metric is valuable when evaluating the feasibility of a computational architecture for neural input.

In the next blog post we will define the product specification guidelines, including the number of electrodes and the band dimensions that are optimal for a neural input wristband.

*All figures shown in this blog are taken from our white paper, available for download here.
